
Research & Measurement

As schools and districts launch new programs and practices, or look to extend existing ones, they often face a critical question: how will we know if it works?

At the same time, educators and leaders often want to know what the research says. When the field of research spans from peer-reviewed journal articles to practitioner-facing publications, it can be difficult to understand how to find credible, actionable information.

Implementing evidence-based practices and identifying opportunities for improvement requires coordinated action. Researchers, practitioners, administrators, and other stakeholders across school systems and support organizations all contribute to measurement projects.

At The Learning Accelerator (TLA), we understand that schools and districts might require a measurement project for a number of reasons:

To explain why something might be occurring (i.e., different patterns of student progress);

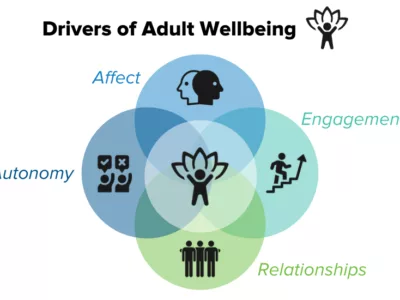

To explore a trend or phenomenon (i.e., adult wellbeing or student engagement in virtual settings); or

To understand the efficacy of an instructional strategy, new academic program, or piece of technology.

Unfortunately, research and practice often exist in silos. Educators and leaders look for what they hope works best for their students, while researchers design and measure programs that may or may not fit classroom realities. We also recognize that measuring what happens inside schools can be complex and nuanced, so we believe in collecting and examining both quantitative (numbers) and qualitative (stories) data. Finally, we believe that research partnerships, in which schools, systems, and organizations form long-term relationships with research teams, offer one solution to these challenges.


Research Strategies

This section explores three areas of measurement that schools and districts may tackle as they implement new practices and programs. Use the subtopics below to dig further into the three areas:

- Research That Informs Our Work – Learn from the Field: Find credible, actionable information in a field that spans peer-reviewed journal articles and practitioner-facing publications. The body of literature behind our strategies and recommended resources includes learning science, neuroscience, leadership, and psychology.

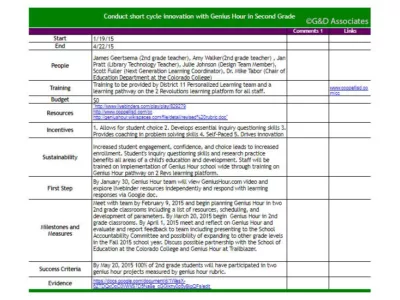

- Design a Pilot – Try Something New: Instead of making a large commitment to a practice or program, a smaller pilot creates an opportunity to try something new, see how it works in practice, and make adjustments before committing at scale. Whether choosing a new instructional practice, curriculum model, piece of technology, or professional learning program, a pilot can help build evidence, gain buy-in, and demonstrate what the new approach may look like in your own context.

- Evaluate a Tool, Pilot, or Program – See How Something Works: Whether implementing a new reading curriculum, a different professional learning format, or even a new assessment platform, a critical question always emerges: how will we know if it worked? The answer requires measuring both the intended outcomes and the process to get there.
